DeepSeek + Dify: A one-article guide to locally deploying an enterprise-grade knowledge base app

Individual developers and hobbyists who want to try DeepSeek locally have many options, but an enterprise-grade deployment involves considerably more steps.

If light customization of the model is enough to meet our business needs, then Ollama, LM Studio, or GPT4All may be sufficient. However, if custom development on top of the model is required, a native deployment of the model needs to be considered.

This article walks through a local deployment of DeepSeek + Dify and shows how to build your own private knowledge base at zero cost. Once you have followed it, you can also upload your own material, such as past articles, diaries, and other personal information, to the local knowledge base to create a personal assistant.

Of course, there are many other application scenarios, such as intelligent customer service and intelligent question banks.

This article follows these main steps:

- Installing Docker

- Installing Ollama

- Installing Dify

- Creating Applications

- Creating a Knowledge Base

I. Download and install Docker

Website: https://www.docker.com/

Click Next all the way through the installer. Docker uses Hyper-V, so if Hyper-V is not already enabled on your computer, you may need to reboot once.

II. Download Ollama

Ollama is an open-source tool designed to simplify running and deploying Large Language Models (LLMs) locally. It focuses on letting users easily run a range of open-source large language models (e.g., DeepSeek, Qwen, Llama, Mistral, Gemma) on their personal computers or servers, without relying on cloud-based services or complex configuration.

Website: https://ollama.com/

After the installation is complete, the Ollama icon appears in the system tray at the lower right corner of the desktop.

III. Installation of the deepseek-r1 model

3.1 Finding the model

Click the search box on the home page of Ollama's official website and deepseek-r1 appears in first position, which shows how popular it is.

The model comes in 1.5b, 7b, 8b, 14b, 32b, 70b, and 671b variants, named by parameter count. Choose the variant that matches your own computer's hardware.

3.2 How do I choose the right model for my computer?

Note that the model size should be chosen according to your computer's hardware configuration. The following is a reference table of model sizes and configurations, from which you can choose according to your own machine. For a local deployment, the larger the model, the better its reasoning results tend to be.
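As a rough aid for this choice, the arithmetic can be sketched in Python. The rule of thumb here is an assumption rather than an official Ollama figure: weights quantized to about 4 bits take roughly 0.5 bytes per parameter, and a 20% headroom factor is added for runtime overhead. The function names are hypothetical.

```python
from typing import Optional

# Assumptions (not from Ollama's documentation): ~4-bit quantized weights,
# i.e. about 0.5 bytes per parameter, plus 20% headroom for runtime overhead.
BYTES_PER_PARAM = 0.5
HEADROOM = 1.2

# Parameter counts in billions for the deepseek-r1 tags listed above.
MODEL_SIZES_B = {
    "1.5b": 1.5, "7b": 7, "8b": 8, "14b": 14,
    "32b": 32, "70b": 70, "671b": 671,
}


def estimated_memory_gb(params_billion: float) -> float:
    """Approximate memory needed to load the model, in GB."""
    return params_billion * BYTES_PER_PARAM * HEADROOM


def largest_model_that_fits(available_gb: float) -> Optional[str]:
    """Return the largest tag whose estimate fits, or None if none fits."""
    fitting = [
        tag for tag, size in MODEL_SIZES_B.items()
        if estimated_memory_gb(size) <= available_gb
    ]
    # Among the tags that fit, pick the one with the most parameters.
    return max(fitting, key=MODEL_SIZES_B.get, default=None)
```

Calling `largest_model_that_fits(8)` with 8 GB of free memory selects the largest tag whose estimate stays under that budget. Treat the result as a starting point only; actual usage depends on quantization and context length.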

3.3 Installation of the r1 model

ollama run deepseek-r1:8b

Ollama provides no graphical interface for installing models, so models are installed from the command line. The process is comparable to pulling an image with Docker, and many of the commands are similar.

3.4 Testing

Once the installation is complete, the model runs automatically and we can type a question at the prompt to test it.
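Besides the interactive prompt, Ollama also serves a local HTTP API (on port 11434 by default), so the same test can be sketched in Python. This is a minimal sketch that assumes Ollama is running on the same machine and that the deepseek-r1:8b tag has been pulled; the function names are illustrative.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address


def build_generate_request(prompt: str, model: str = "deepseek-r1:8b") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "deepseek-r1:8b") -> str:
    """Send the prompt to the local model and return its full answer."""
    with urllib.request.urlopen(build_generate_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

With Ollama running, `print(ask("Who are you?"))` should print the model's reply, including its thinking section.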

IV. Installing Dify

Dify official website: http://difyai.com/

Dify official documentation: https://docs.dify.ai/zh-hans

GitHub address of the Dify project: https://github.com/langgenius/dify

4.1 Understanding Dify first

Here is a brief introduction to Dify. For a detailed understanding, see the official website or the official documentation.

Dify.AI is an open-source platform for developing large model applications, designed to help developers easily build and operate generative AI native applications. The platform provides a full range of capabilities, from Agent construction to AI workflow orchestration, RAG retrieval, and model management, enabling developers to focus on creating the core value of their applications without spending too much effort on technical details.

As the Create Application page shows, it can create: Chat Assistant, Agent, Text-to-Speech Application, Conversation Workflow, Task Scheduling Workflow, etc.

It supports almost all of the world's well-known large model vendors.

This includes, of course, the domestic DeepSeek, as well as Ollama.

It also supports creating knowledge bases from existing text, Notion, web pages, and other data sources.

This means that by deploying a local large model together with an enterprise knowledge base, we can deploy private enterprise AI applications, for example: sales and customer service for an enterprise's vertical domain, HR training for newly recruited employees, or private question banks for educational institutions. These applications can also be built flexibly through the API.

4.2 Download the zip of the Dify project

4.3 Installing the Dify environment

1) Go to the project root directory and find the docker folder

2) Rename the .env.example file to .env

3) Right-click inside the folder and open the command line

4) Run the docker environment

docker compose up -d

At this point, you can go back to the Docker Desktop client and see that all the services Dify needs are up and running.
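Steps 1) to 4) above can also be scripted. The following Python sketch copies .env.example to .env (copying rather than renaming, so the example file stays available as a reference) and then starts the containers. The function names are hypothetical, and the docker folder path depends on where you unzipped the project.

```python
import shutil
import subprocess
from pathlib import Path


def prepare_env_file(docker_dir: Path) -> Path:
    """Copy .env.example to .env inside the docker folder if .env is missing."""
    example = docker_dir / ".env.example"
    target = docker_dir / ".env"
    if not target.exists():
        # Never overwrite an existing .env that may already hold custom settings.
        shutil.copyfile(example, target)
    return target


def start_dify(docker_dir: Path) -> None:
    """Run `docker compose up -d` from the docker folder."""
    subprocess.run(["docker", "compose", "up", "-d"], cwd=docker_dir, check=True)
```

For example, with the project unzipped to a hypothetical `dify` folder, call `prepare_env_file(Path("dify/docker"))` followed by `start_dify(Path("dify/docker"))`.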

4.4 Installing Dify

Enter the following in your browser's address bar to start the installation:

http://127.0.0.1/install

Then log in to your account

You then arrive at the Dify homepage:

V. How to connect the local large model to Dify?

In this tutorial, Dify is deployed via Docker, so the project runs inside Docker containers, while Ollama runs directly on the local computer. Although both are on the same machine, localhost inside a container refers to the container itself, so for Dify to reach the service provided by Ollama it needs the intranet IP of the local computer.

5.1 Obtaining the local intranet IP

Open the Run dialog: Win+R

Input: cmd

At the command line, type ipconfig and locate the currently connected network adapter (e.g., "Wireless LAN adapter WLAN" or "Ethernet adapter"). Its IPv4 address is the intranet IP (e.g., 192.168.x.x or 10.x.x.x).
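The same lookup can be sketched in Python as an alternative to reading the ipconfig output by hand. This is a common trick rather than anything specific to Dify or Ollama: connecting a UDP socket selects the outbound network interface without sending any data. The target address 8.8.8.8 is an arbitrary routable address chosen for illustration, and the function names are hypothetical.

```python
import ipaddress
import socket


def local_ipv4() -> str:
    """Return the IPv4 address of the interface used for outbound traffic."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as s:
        # connect() on a UDP socket sends no packets; it only selects a route.
        s.connect(("8.8.8.8", 80))
        return s.getsockname()[0]


def is_intranet_ip(address: str) -> bool:
    """True for private-range addresses such as 192.168.x.x or 10.x.x.x."""
    ip = ipaddress.ip_address(address)
    # Loopback (127.x.x.x) is excluded: it is not reachable from a container.
    return ip.is_private and not ip.is_loopback
```

Running `print(local_ipv4())` on the host should show the same IPv4 address that ipconfig reports for the active adapter, and `is_intranet_ip` can confirm that it falls in a private range.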

5.2 Add the local intranet IP to Dify's Docker deployment configuration file

Go to the docker folder under the Dify project. Having previously renamed .env.example to .env, open the .env file now and add the following configuration at the end:

# Enable custom model
CUSTOM_MODEL_ENABLED=true
# Specify Ollama's API address (adjust IP based on deployment environment)
OLLAMA_API_BASE_URL=http://192.168.1.10:11434
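A typo in this address (for example `http.//` instead of `http://`) is easy to make and hard to spot, so a quick sanity check can help. The Python sketch below parses the .env text and validates the Ollama URL. It is an illustrative helper, not part of Dify, and the function names are hypothetical.

```python
from urllib.parse import urlparse


def parse_env(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def check_ollama_url(env: dict[str, str]) -> str:
    """Return the Ollama base URL, or raise ValueError if it looks wrong."""
    url = env.get("OLLAMA_API_BASE_URL", "")
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https") or not parsed.hostname:
        raise ValueError(f"OLLAMA_API_BASE_URL is not a valid URL: {url!r}")
    if parsed.hostname in ("localhost", "127.0.0.1"):
        # Inside a container, localhost is the container itself, not the host.
        raise ValueError("use the host's intranet IP, not localhost")
    return url
```

To use it, read the file with `Path(".env").read_text()`, pass the text to `parse_env`, and pass the result to `check_ollama_url` before restarting the containers.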

5.3 Configuring the large model

5.4 Setting up the system model

At this point, Dify is connected to the local large model deployed earlier.

VI. Creating an application

6.1 Creating a Blank Application

6.2 Application configuration

6.3 Configuring the current application's large model

6.4 Testing

This shows that Dify is connected to the locally deployed deepseek-r1 model. But what if I want its answers to use my private knowledge base as context when chatting with me? That requires a local knowledge base.

VII. Creating a local knowledge base

7.1 Adding Embedding Models

Why add an Embedding model?

The role of an embedding model is to transform high-dimensional data (e.g., text, images) into low-dimensional vectors that capture the semantic information in the original data. Common applications include text classification, similarity search, and recommender systems.

The material we upload must be converted into vectors by the embedding model and stored in a vector database. Then, when a question is asked in natural language, the system can match it against the meaning of the original data and recall the relevant passages. This is why private data needs to be vectorized and stored in the database in advance.
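To make the recall step concrete, here is a minimal Python sketch of similarity search over vectors. The three-dimensional vectors and the sample texts are invented for illustration; a real embedding model produces vectors with hundreds of dimensions, and Dify's internal implementation is not shown in this article. Cosine similarity is used here as one common way to compare embeddings.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy 3-dimensional "embeddings" invented for illustration only.
# A real embedding model would compute these vectors from the text.
knowledge_base = {
    "Refund policy: 7 days": [0.9, 0.1, 0.0],
    "Office opening hours": [0.1, 0.9, 0.1],
    "Shipping takes 3 days": [0.6, 0.2, 0.5],
}


def recall(query_vector: list[float], top_k: int = 2) -> list[str]:
    """Return the top_k stored chunks most similar to the query vector."""
    ranked = sorted(
        knowledge_base,
        key=lambda text: cosine_similarity(query_vector, knowledge_base[text]),
        reverse=True,
    )
    return ranked[:top_k]
```

A query vector close to the first entry, such as `[1.0, 0.0, 0.0]`, ranks the refund text first. In the real pipeline the query vector comes from running the user's question through the same embedding model.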

7.2 Creating a knowledge base

7.3 Uploading information

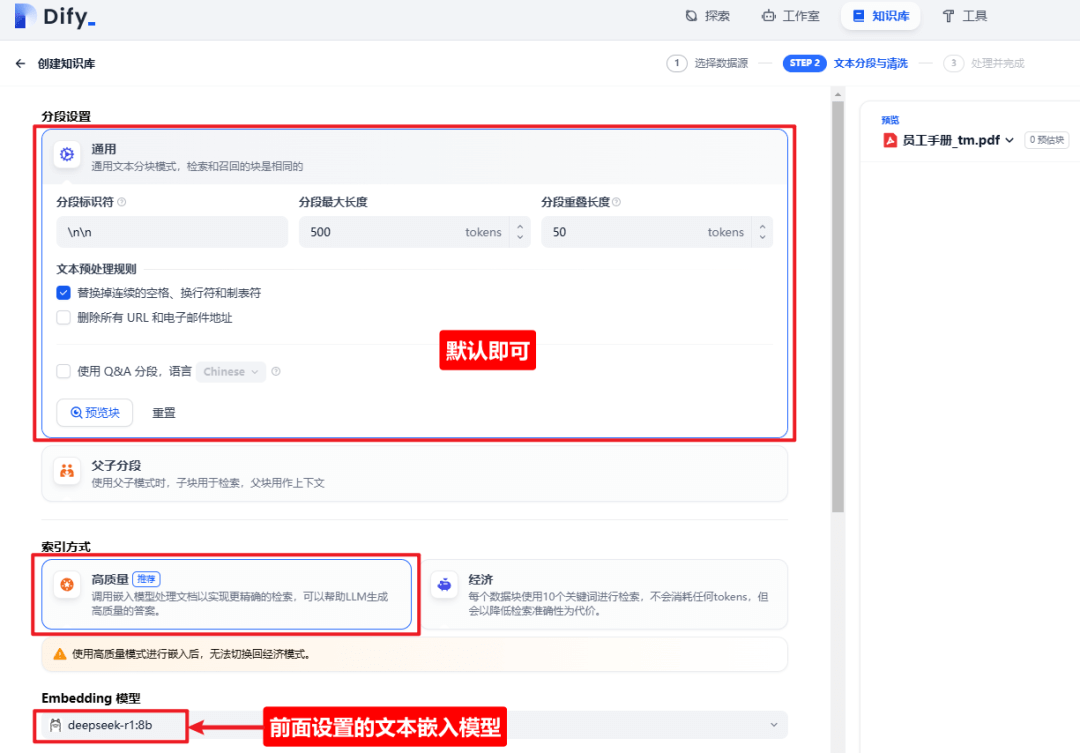

7.4 Saving and processing

7.5 Knowledge base creation completed

VIII. Adding the knowledge base as a dialog context

8.1 Adding a knowledge base within an application

Go back to the app chat page you were just on and add the knowledge base.

8.2 Saving the current application settings

If you are only debugging within the current application, you do not need to publish an update. But if you want to publish the application as an external API service or keep it persistently, you need to save the current application settings, so remember to save as you go.
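Once the application is published, it can be called as an API service. The Python sketch below builds a request for Dify's chat-messages endpoint in blocking mode. The base URL assumes the local deployment from this article, the API key is a placeholder that must be replaced with the key shown on the application's API access page, and the function names are illustrative.

```python
import json
import urllib.request

DIFY_API_BASE = "http://127.0.0.1/v1"  # assumed base URL of the local Dify API
API_KEY = "app-your-api-key"           # placeholder, replace with your app's key


def build_chat_request(query: str, user: str = "local-tester") -> urllib.request.Request:
    """Build a blocking-mode POST request for the chat-messages endpoint."""
    payload = {
        "inputs": {},                 # no application variables in this sketch
        "query": query,
        "response_mode": "blocking",  # wait for the full answer in one response
        "user": user,                 # any stable identifier for the end user
    }
    return urllib.request.Request(
        f"{DIFY_API_BASE}/chat-messages",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def chat(query: str) -> str:
    """Send the question to the published app and return its answer text."""
    with urllib.request.urlopen(build_chat_request(query)) as resp:
        return json.loads(resp.read())["answer"]
```

With a real key in place, `print(chat("What does the knowledge base say about refunds?"))` sends one question to the published application.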

8.3 Testing

The thinking process has a strong DeepSeek style: it reads as if a very serious person were looking through the document. The model walks through the process of consulting the document, lays it out clearly, and finally gives its own conclusion, all very rigorously. With a specialized academic knowledge base, it would likewise help you deduce, reason, and summarize, which is very convenient.

If you feel that the answer is still not satisfactory, you can adjust the recall parameters.

Or you can refer to the official documentation for other detailed settings:

https://docs.dify.ai/zh-hans/guides/knowledge-base
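To illustrate what adjusting the recall parameters does, the Python sketch below filters scored chunks by a minimum score and a maximum count. The parameter names `top_k` and `score_threshold` are assumed to correspond to the Top K and score threshold options in the knowledge base retrieval settings, and the scores are invented. It is a conceptual sketch, not Dify's implementation.

```python
def filter_recalled(scored_chunks, top_k=3, score_threshold=0.5):
    """Keep chunks scoring at least score_threshold, best first, at most top_k."""
    kept = [(text, score) for text, score in scored_chunks if score >= score_threshold]
    kept.sort(key=lambda pair: pair[1], reverse=True)
    return kept[:top_k]


# Hypothetical similarity scores for four chunks of a knowledge base.
candidates = [
    ("chunk A", 0.91),
    ("chunk B", 0.42),
    ("chunk C", 0.77),
    ("chunk D", 0.58),
]
```

Raising the threshold drops weak matches, which reduces irrelevant context but can leave the model with nothing to cite. Raising `top_k` gives the model more context at the cost of a longer prompt.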

IX. Conclusion

Because DeepSeek is a large model that can be deployed privately and locally, combining it with Dify opens up many application scenarios, such as intelligent customer service and intelligent question banks.

You can also upload your profile, past articles, diaries, and other personal information to the local knowledge base to create your own personal assistant.

Dify has many other features, and with the support of an excellent domestic large model like DeepSeek, we can build more agent applications. Of course, like Coze, Dify can also publish APIs for external services, so more AI apps can be made quickly in combination with Cursor.

The only way to break the mold is to take action! I hope that you, the reader, will take positive action and build your own AI app!

Note: This article comes from the DeepSeek Technical Community.

